While Tesla vehicles drive on public roads with human drivers in control, their autonomous systems are quietly learning. Tesla’s “shadow mode” works like a co-pilot that constantly watches and learns without ever touching the steering wheel. The system runs the vehicle’s sensors and processes what it “sees,” then compares its decisions to what the actual human driver does.

Shadow mode doesn’t control the car at all. Instead, it makes simulated driving decisions and flags moments when its predictions differ from the driver’s actions. These disagreements often mark edge cases, and they help Tesla’s engineers understand where their self-driving algorithms need improvement. The system learns by observing more than 100,000 automated lane changes a day, along with other driving scenarios.
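To make the comparison step concrete, here is a minimal sketch in Python. It is not Tesla’s code: the `Frame` record, the steering-angle fields, and the 5-degree threshold are all hypothetical, chosen only to show how a passive system might flag moments where its prediction and the driver’s action diverge.

```python
from dataclasses import dataclass


@dataclass
class Frame:
    """One logged moment of driving (hypothetical structure)."""
    timestamp: float               # seconds since the start of the drive
    driver_steering_deg: float     # what the human actually did
    predicted_steering_deg: float  # what the shadow system would have done


def flag_disagreements(frames, threshold_deg=5.0):
    """Return the frames where prediction and driver differ by more than the threshold.

    The threshold is an illustrative assumption, not a known Tesla parameter.
    """
    return [
        f for f in frames
        if abs(f.predicted_steering_deg - f.driver_steering_deg) > threshold_deg
    ]


frames = [
    Frame(0.0, 0.0, 0.5),    # close agreement
    Frame(0.1, 2.0, 9.0),    # 7 degrees apart: flagged
    Frame(0.2, -3.0, -2.0),  # close agreement
]

flagged = flag_disagreements(frames)
for f in flagged:
    print(f"Disagreement at t={f.timestamp}s")
```

Nothing in this loop sends a command to the vehicle. The sketch only reads logged values and records where they differ, which is the sense in which shadow mode is passive.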

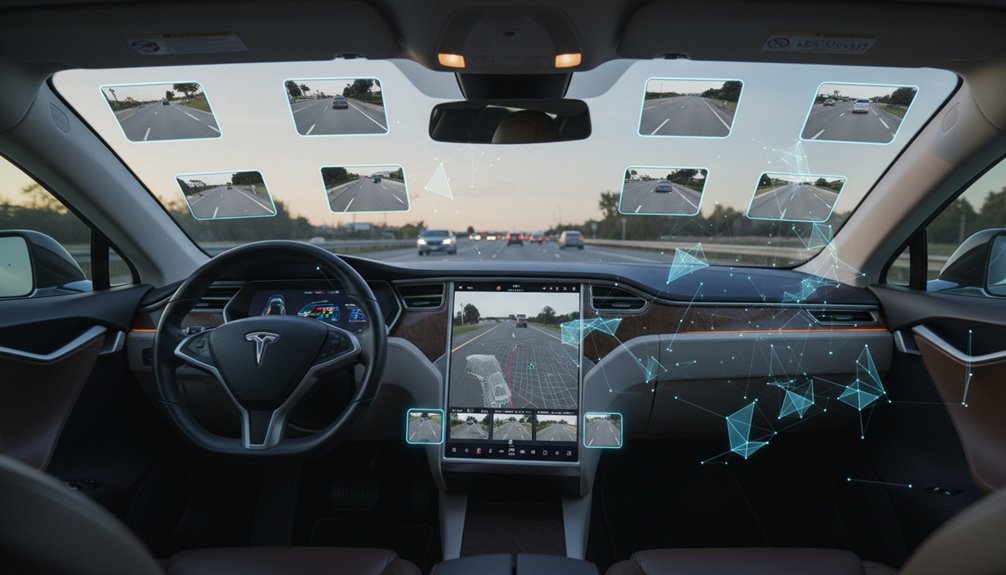

Tesla’s vehicles already have the hardware needed for this work. Eight cameras provide 360-degree coverage of everything around the car. This vision-based sensor setup feeds Tesla’s neural networks, which use multitask learning to handle several perception tasks at once. All of this happens alongside regular vehicle functions, often without drivers even knowing it’s occurring.

The collected data is especially useful in challenging driving situations. Unprotected left turns and complex intersections produce significant learning moments. When human drivers make a different decision than the algorithm would have, Tesla’s engineers analyze why. This real-world data helps them understand the gaps between their computer simulations and actual road performance.

Tesla’s shadow mode didn’t appear overnight. The company committed to a vision-based autonomous approach back in 2016. That approach has evolved through multiple hardware versions and is part of Tesla’s long-term plan for full autonomy. The system connects everything through Tesla’s unified software design. This continuous learning approach has helped Tesla collect data from nine billion miles of real-world driving to refine its autonomous systems.

However, shadow mode has real limitations. It doesn’t work well in poor visibility such as rain, snow, or darkness. Tunnels and bright light can interfere with the cameras. The system only operates for drivers with safety scores above 45 percent. Because it is passive, it can’t drive the vehicle independently, so a human driver remains in control at all times.

Tesla’s shadow mode is a passive testing strategy. It lets the company validate new algorithm versions before releasing them to the public. By comparing what the algorithm would do with what drivers actually do, Tesla gathers the information needed to gradually improve its autonomous systems.
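One way to picture this kind of pre-release validation is to score each algorithm version by how often it disagrees with human drivers over the same logged drive. The sketch below is an illustration under stated assumptions, not Tesla’s actual process: the action labels, the `disagreement_rate` helper, and the sample data are all hypothetical.

```python
def disagreement_rate(driver_actions, predicted_actions):
    """Fraction of moments where the predicted action differs from the driver's.

    Both arguments are equal-length sequences of action labels (hypothetical).
    """
    if len(driver_actions) != len(predicted_actions):
        raise ValueError("action logs must have the same length")
    if not driver_actions:
        return 0.0
    mismatches = sum(d != p for d, p in zip(driver_actions, predicted_actions))
    return mismatches / len(driver_actions)


# Made-up log of what the human driver did at four moments.
driver = ["keep_lane", "change_left", "keep_lane", "brake"]

# Made-up shadow predictions from two algorithm versions on the same drive.
current = ["keep_lane", "keep_lane", "keep_lane", "brake"]
candidate = ["keep_lane", "change_left", "keep_lane", "brake"]

current_rate = disagreement_rate(driver, current)
candidate_rate = disagreement_rate(driver, candidate)

print(f"current version disagrees on {current_rate:.0%} of moments")
print(f"candidate version disagrees on {candidate_rate:.0%} of moments")
```

A lower rate does not prove the candidate is safer, since human drivers also make mistakes. It is a cheap signal that a new version behaves more like people do before it ever controls a car.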